Trustworthy Interaction-Aware Decision Making and Planning

Research Question

How will autonomous vehicles decide which action to take?

Summary

For autonomous systems, the ability to predict how their surroundings will evolve is essential for safe, reliable, and efficient operation. Prediction is especially important when the autonomous system must interact and “negotiate” with humans, whether the setting is cooperative, adversarial, or anywhere in between. This line of research involves quantifying the relative likelihoods of multiple, possibly highly distinct futures in interactive scenarios; planning strategies in which the autonomous agent is cognizant of how the human may respond; developing models that offer transparency into the autonomous agent’s decision-making process; and designing safe human-in-the-loop testing methodologies to validate our models and planning algorithms.

Related Works

B. Ivanovic, K. Leung, E. Schmerling, and M. Pavone, “Multimodal Deep Generative Models for Trajectory Prediction: A Conditional Variational Autoencoder Approach,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 295–302, Apr. 2021. (In Press)

Abstract: Human behavior prediction models enable robots to anticipate how humans may react to their actions, and hence are instrumental to devising safe and proactive robot planning algorithms. However, modeling complex interaction dynamics and capturing the possibility of many possible outcomes in such interactive settings is very challenging, which has recently prompted the study of several different approaches. In this work, we provide a self-contained tutorial on a conditional variational autoencoder (CVAE) approach to human behavior prediction which, at its core, can produce a multimodal probability distribution over future human trajectories conditioned on past interactions and candidate robot future actions. Specifically, the goals of this tutorial paper are to review and build a taxonomy of state-of-the-art methods in human behavior prediction, from physics-based to purely data-driven methods, provide a rigorous yet easily accessible description of a data-driven, CVAE-based approach, highlight important design characteristics that make this an attractive model to use in the context of model-based planning for human-robot interactions, and provide important design considerations when using this class of models.
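As a rough illustration of what a "multimodal probability distribution over future human trajectories" can look like, the sketch below assumes a discrete latent mode z, so that p(y | x) = Σ_z p(z | x) p(y | x, z), with an isotropic Gaussian per mode. The function names, the Gaussian choice, and the numbers in the usage note are assumptions made for illustration. In an actual CVAE the mode probabilities and per-mode parameters would come from learned networks conditioned on the interaction history and the candidate robot actions; this is not the paper's implementation.

```python
import math
import random


def gaussian_logpdf(y, mean, std):
    """Log-density of an isotropic Gaussian N(mean, std^2 I) evaluated at y."""
    d = len(y)
    sq_dist = sum((yi - mi) ** 2 for yi, mi in zip(y, mean))
    return (-0.5 * sq_dist / std ** 2
            - d * math.log(std)
            - 0.5 * d * math.log(2.0 * math.pi))


def mixture_logpdf(y, mode_probs, mode_means, mode_stds):
    """log p(y | x) = log sum_z p(z | x) p(y | x, z), computed with log-sum-exp."""
    terms = [
        math.log(p) + gaussian_logpdf(y, m, s)
        for p, m, s in zip(mode_probs, mode_means, mode_stds)
        if p > 0.0  # modes with zero probability contribute nothing
    ]
    top = max(terms)
    return top + math.log(sum(math.exp(t - top) for t in terms))


def sample_future(rng, mode_probs, mode_means, mode_stds):
    """Draw a mode z ~ p(z | x), then a future y ~ p(y | x, z)."""
    z = rng.choices(range(len(mode_probs)), weights=mode_probs, k=1)[0]
    y = [rng.gauss(m, mode_stds[z]) for m in mode_means[z]]
    return z, y
```

For example, two hypothetical modes such as "human yields" and "human passes" could be encoded as `mode_probs = [0.7, 0.3]` with two distinct mean endpoints. `sample_future(random.Random(0), ...)` then draws one plausible future, while `mixture_logpdf` scores an observed one.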

@article{IvanovicLeungEtAl2020, author = {Ivanovic, B. and Leung, K. and Schmerling, E. and Pavone, M.}, title = {Multimodal Deep Generative Models for Trajectory Prediction: A Conditional Variational Autoencoder Approach}, journal = {{IEEE Robotics and Automation Letters}}, volume = {6}, number = {2}, pages = {295--302}, year = {2021}, note = {In Press}, month = apr, url = {https://arxiv.org/abs/2008.03880}, keywords = {press}, owner = {borisi}, timestamp = {2020-12-23} }

B. Ivanovic, A. Elhafsi, G. Rosman, A. Gaidon, and M. Pavone, “MATS: An Interpretable Trajectory Forecasting Representation for Planning and Control,” in Conf. on Robot Learning, 2020.

Abstract: Reasoning about human motion is a core component of modern human-robot interactive systems. In particular, one of the main uses of behavior prediction in autonomous systems is to inform ego-robot motion planning and control. However, a majority of planning and control algorithms reason about system dynamics rather than the predicted agent tracklets that are commonly output by trajectory forecasting methods, which can hinder their integration. Towards this end, we propose Mixtures of Affine Time-varying Systems (MATS) as an output representation for trajectory forecasting that is more amenable to downstream planning and control use. Our approach leverages successful ideas from probabilistic trajectory forecasting works to learn dynamical system representations that are well-studied in the planning and control literature. We integrate our predictions with a proposed multimodal planning methodology and demonstrate significant computational efficiency improvements on a large-scale autonomous driving dataset.
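To make the idea of predicting dynamical systems rather than tracklets concrete, the sketch below rolls out a small mixture of affine time-varying systems. It assumes each mode has the form x_{t+1} = A_t x_t + B_t u_t + c_t, where u_t is the robot's control. That specific form, the helper names, and the plain-list matrix representation are assumptions for illustration, not the paper's code. Because each mode depends on the robot's controls through B_t, a planner can evaluate candidate control sequences directly against the prediction.

```python
def mat_vec(M, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]


def affine_step(A, B, c, x, u):
    """One step of an affine system: x_next = A x + B u + c."""
    Ax = mat_vec(A, x)
    Bu = mat_vec(B, u)
    return [a + b + ci for a, b, ci in zip(Ax, Bu, c)]


def rollout_mode(mode, x0, controls):
    """Roll out one mode; `mode` is a list of (A_t, B_t, c_t), one per time step."""
    xs = [list(x0)]
    for (A, B, c), u in zip(mode, controls):
        xs.append(affine_step(A, B, c, xs[-1], u))
    return xs


def expected_final_state(mode_probs, modes, x0, controls):
    """Probability-weighted average of the final state across all modes."""
    finals = [rollout_mode(mode, x0, controls)[-1] for mode in modes]
    return [
        sum(p * f[i] for p, f in zip(mode_probs, finals))
        for i in range(len(x0))
    ]
```

A planner could call `rollout_mode` once per mode for each candidate control sequence and weight the resulting costs by the mode probabilities.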

@inproceedings{IvanovicElhafsiEtAl2020, author = {Ivanovic, B. and Elhafsi, A. and Rosman, G. and Gaidon, A. and Pavone, M.}, title = {{MATS}: An Interpretable Trajectory Forecasting Representation for Planning and Control}, booktitle = {{Conf. on Robot Learning}}, year = {2020}, month = nov, owner = {borisi}, timestamp = {2020-10-14}, url = {https://arxiv.org/abs/2009.07517} }

T. Salzmann, B. Ivanovic, P. Chakravarty, and M. Pavone, “Trajectron++: Dynamically-Feasible Trajectory Forecasting With Heterogeneous Data,” in European Conf. on Computer Vision, 2020.

Abstract: Reasoning about human motion is an important prerequisite to safe and socially-aware robotic navigation. As a result, multi-agent behavior prediction has become a core component of modern human-robot interactive systems, such as self-driving cars. While there exist many methods for trajectory forecasting, most do not enforce dynamic constraints and do not account for environmental information (e.g., maps). Towards this end, we present Trajectron++, a modular, graph-structured recurrent model that forecasts the trajectories of a general number of diverse agents while incorporating agent dynamics and heterogeneous data (e.g., semantic maps). Trajectron++ is designed to be tightly integrated with robotic planning and control frameworks; for example, it can produce predictions that are optionally conditioned on ego-agent motion plans. We demonstrate its performance on several challenging real-world trajectory forecasting datasets, outperforming a wide array of state-of-the-art deterministic and generative methods.
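One common way to obtain dynamically feasible forecasts is to predict control inputs and integrate them through a known dynamics model, instead of predicting positions directly. The sketch below shows that idea with a unicycle model and forward-Euler integration. The choice of model, the integration scheme, and the function name are assumptions for illustration and should not be read as the Trajectron++ implementation.

```python
import math


def integrate_unicycle(state, controls, dt):
    """Integrate unicycle dynamics with forward Euler.

    state:    (x, y, heading, speed)
    controls: sequence of (yaw_rate, acceleration) pairs, one per time step
    Returns the list of states visited, including the initial one.
    """
    x, y, heading, speed = state
    trajectory = [(x, y, heading, speed)]
    for yaw_rate, accel in controls:
        # Position advances using the heading and speed at the start of the step.
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        heading += yaw_rate * dt
        speed += accel * dt
        trajectory.append((x, y, heading, speed))
    return trajectory
```

Every trajectory produced this way satisfies the model's kinematics by construction, whatever controls the predictor outputs.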

@inproceedings{SalzmannIvanovicEtAl2020, author = {Salzmann, T. and Ivanovic, B. and Chakravarty, P. and Pavone, M.}, title = {Trajectron++: Dynamically-Feasible Trajectory Forecasting With Heterogeneous Data}, booktitle = {{European Conf. on Computer Vision}}, year = {2020}, address = {}, month = aug, owner = {borisi}, timestamp = {2020-09-14}, url = {https://arxiv.org/abs/2001.03093} }

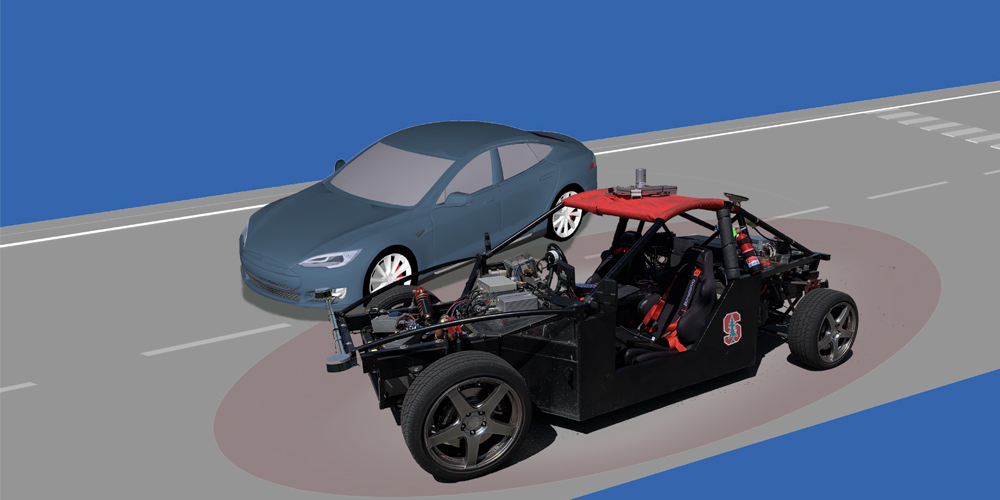

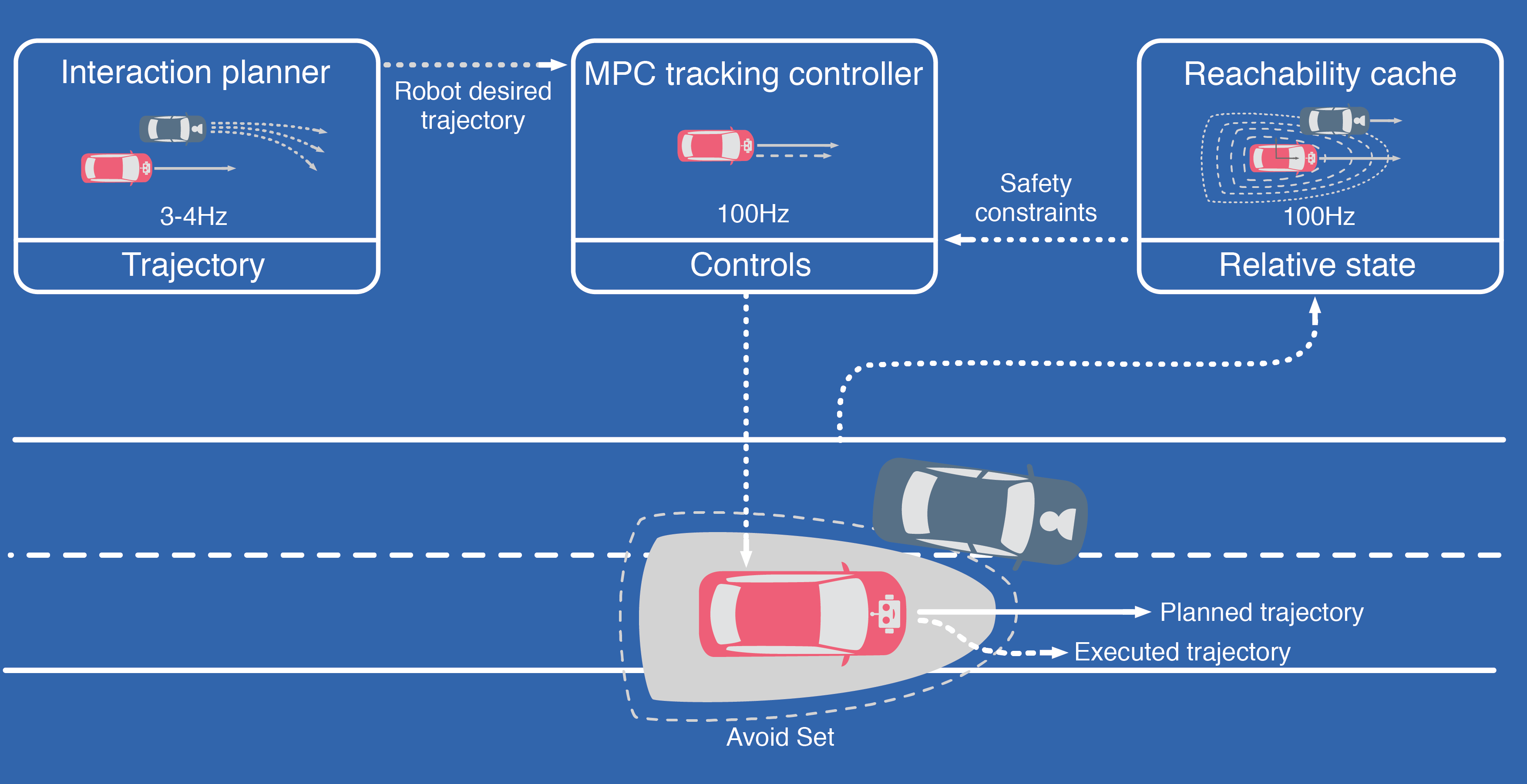

K. Leung, E. Schmerling, M. Zhang, M. Chen, J. Talbot, J. C. Gerdes, and M. Pavone, “On Infusing Reachability-Based Safety Assurance within Planning Frameworks for Human-Robot Vehicle Interactions,” Int. Journal of Robotics Research, vol. 39, no. 10–11, pp. 1326–1345, 2020.

Abstract: Action anticipation, intent prediction, and proactive behavior are all desirable characteristics for autonomous driving policies in interactive scenarios. Paramount, however, is ensuring safety on the road—a key challenge in doing so is accounting for uncertainty in human driver actions without unduly impacting planner performance. This paper introduces a minimally-interventional safety controller operating within an autonomous vehicle control stack with the role of ensuring collision-free interaction with an externally controlled (e.g., human-driven) counterpart while respecting static obstacles such as a road boundary wall. We leverage reachability analysis to construct a real-time (100Hz) controller that serves the dual role of (1) tracking an input trajectory from a higher-level planning algorithm using model predictive control, and (2) assuring safety through maintaining the availability of a collision-free escape maneuver as a persistent constraint regardless of whatever future actions the other car takes. A full-scale steer-by-wire platform is used to conduct traffic weaving experiments wherein two cars, initially side-by-side, must swap lanes in a limited amount of time and distance, emulating cars merging onto/off of a highway. We demonstrate that, with our control stack, the autonomous vehicle is able to avoid collision even when the other car defies the planner’s expectations and takes dangerous actions, either carelessly or with the intent to collide, and otherwise deviates minimally from the planned trajectory to the extent required to maintain safety.
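The "minimally interventional" idea can be illustrated with a deliberately simplified one-dimensional toy: a vehicle approaches a static wall, and a filter passes the planner's desired acceleration through unchanged unless doing so would remove the escape maneuver (full braking). This toy replaces the paper's reachability computation with a closed-form stopping-distance check and replaces the human-driven car with a fixed obstacle. The function names and parameters are all assumptions for illustration.

```python
def step(gap, speed, accel, dt):
    """Advance the 1D vehicle by dt under constant acceleration, never reversing."""
    if accel < 0.0 and speed + accel * dt < 0.0:
        # The vehicle comes to rest partway through the step.
        t_stop = speed / -accel
        return gap - (speed * t_stop + 0.5 * accel * t_stop ** 2), 0.0
    return gap - (speed * dt + 0.5 * accel * dt ** 2), speed + accel * dt


def can_still_stop(gap, speed, max_brake):
    """True if full braking from this state stops before the wall."""
    return gap >= speed ** 2 / (2.0 * max_brake)


def safety_filter(gap, speed, desired_accel, max_brake, dt):
    """Return desired_accel if the escape maneuver survives it, else brake fully."""
    next_gap, next_speed = step(gap, speed, desired_accel, dt)
    if can_still_stop(next_gap, next_speed, max_brake):
        return desired_accel  # no intervention needed
    return -max_brake  # intervene with the escape maneuver


def simulate(gap, speed, desired_accel, max_brake, dt, n_steps):
    """Closed-loop rollout; returns the gap to the wall after every step."""
    gaps = []
    for _ in range(n_steps):
        accel = safety_filter(gap, speed, desired_accel, max_brake, dt)
        gap, speed = step(gap, speed, accel, dt)
        gaps.append(gap)
    return gaps
```

In this toy, a planner that always requests acceleration is left alone while far from the wall and is overridden only once the stopping margin would otherwise be lost.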

@article{LeungSchmerlingEtAl2019, author = {Leung, K. and Schmerling, E. and Zhang, M. and Chen, M. and Talbot, J. and Gerdes, J. C. and Pavone, M.}, title = {On Infusing Reachability-Based Safety Assurance within Planning Frameworks for Human-Robot Vehicle Interactions}, journal = {{Int. Journal of Robotics Research}}, year = {2020}, volume = {39}, number = {10--11}, pages = {1326--1345}, url = {/wp-content/papercite-data/pdf/Leung.Schmerling.ea.IJRR19.pdf}, timestamp = {2020-10-13} }